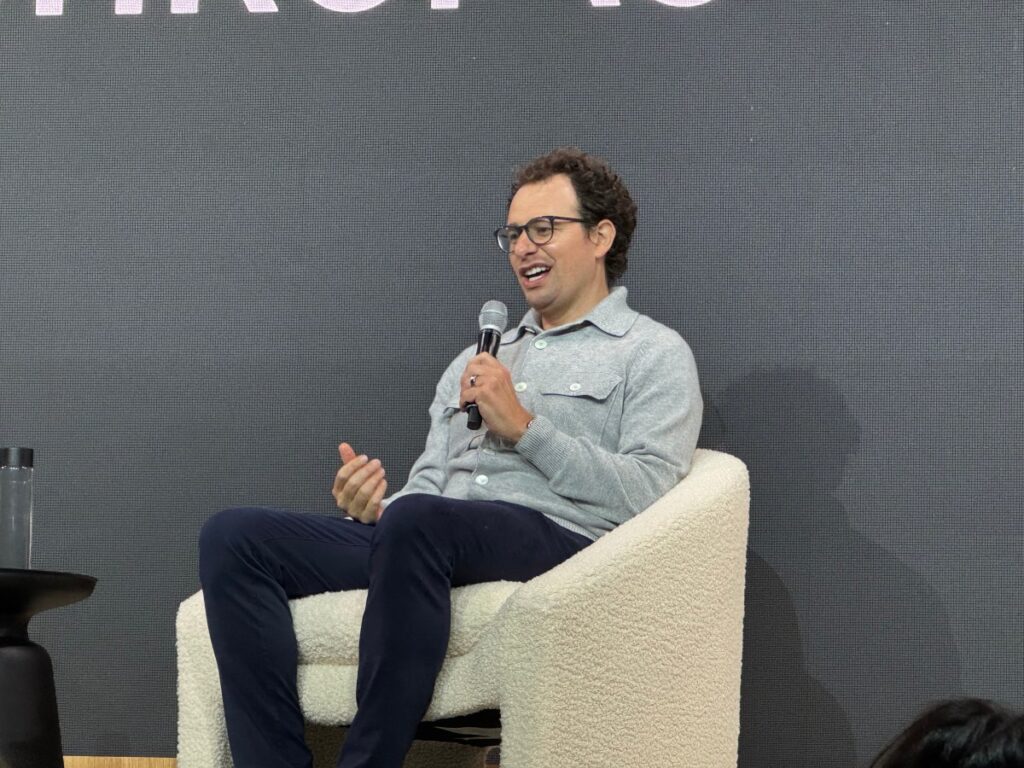

Anthropic CEO Dario Amodei believes today’s AI models hallucinate, or make things up and present them as if they’re true, at a lower rate than humans do, he said during a press briefing at Anthropic’s first developer event, Code with Claude, in San Francisco on Thursday.

Amodei said all this in the midst of a larger point he was making: that AI hallucinations are not a limitation on Anthropic’s path to AGI — AI systems with human-level intelligence or better.

“It really depends how you measure it, but I suspect that AI models probably hallucinate less than humans, but they hallucinate in more surprising ways,” Amodei said, responding to TechCrunch’s question.

Anthropic’s CEO is one of the most bullish leaders in the industry on the prospect of AI models achieving AGI. In a widely circulated paper he wrote last year, Amodei said he believed AGI could arrive as soon as 2026. During Thursday’s press briefing, the Anthropic CEO said he was seeing steady progress to that end, noting that “the water is rising everywhere.”

“Everyone’s always looking for these hard blocks on what [AI] can do,” said Amodei. “They’re nowhere to be seen. There’s no such thing.”

Other AI leaders believe hallucination presents a large obstacle to achieving AGI. Earlier this week, Google DeepMind CEO Demis Hassabis said today’s AI models have too many “holes,” and get too many obvious questions wrong. For example, earlier this month, a lawyer representing Anthropic was forced to apologize in court after they used Claude to create citations in a court filing, and the AI chatbot hallucinated and got names and titles wrong.

It’s difficult to verify Amodei’s claim, largely because most hallucination benchmarks pit AI models against each other; they don’t compare models to humans. Certain techniques seem to be helping lower hallucination rates, such as giving AI models access to web search. Separately, some AI models, such as OpenAI’s GPT-4.5, have notably lower hallucination rates on benchmarks compared to early generations of systems.

However, there’s also evidence to suggest hallucinations are actually getting worse in advanced reasoning AI models. OpenAI’s o3 and o4-mini models have higher hallucination rates than OpenAI’s previous-gen reasoning models, and the company doesn’t really understand why.

Later in the press briefing, Amodei pointed out that TV broadcasters, politicians, and humans in all types of professions make mistakes all the time. The fact that AI makes mistakes too is not a knock on its intelligence, according to Amodei. However, Anthropic’s CEO acknowledged the confidence with which AI models present untrue things as facts might be a problem.

In fact, Anthropic has done a fair amount of research on the tendency for AI models to deceive humans, a problem that seemed especially prevalent in the company’s recently launched Claude Opus 4. Apollo Research, a safety institute given early access to test the AI model, found that an early version of Claude Opus 4 exhibited a high tendency to scheme against humans and deceive them. Apollo went as far as to suggest Anthropic shouldn’t have released that early model. Anthropic said it came up with some mitigations that appeared to address the issues Apollo raised.

Amodei’s comments suggest that Anthropic may consider an AI model to be AGI, or equal to human-level intelligence, even if it still hallucinates. An AI that hallucinates may fall short of AGI by many people’s definition, though.